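Preface

I have wanted to write about scrub for a long time. Quite a while ago I ran a test to see how much impact scrub really has. What I saw then was very high disk read usage: once deep-scrub starts there is a large volume of reads, and the front end may see slow requests. The simple workaround at the time was to turn scrub off. Of course, with scrub off there is no way to detect whether objects in the underlying store have become inconsistent.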

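Recently I saw a discussion in a Ceph chat group that went roughly like this:

scrub is a trap

In workloads with lots of small files, scrub must be turned off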

Is there anything wrong with these claims? In the general case they hold. But can we try to solve the problem, or at least ease it? Let's give it a try.

Tracing scrub

The tracing below does not touch the code. It only uses configuration and log observation to see what scrub actually does.

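Environment preparation

To make observation easier, my environment is a pool with a single PG. I put 100 objects into this PG and then ran deep-scrub on it. deep-scrub puts more pressure on the disk than scrub, so this article mainly observes deep-scrub.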
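A minimal sketch of how such a test PG could be filled and scrubbed. The pool name `rbd`, the PG id `1.0` and the object names `a1` .. `a100` follow what appears elsewhere in this article; the helper function names, the payload file and its size are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and the payload file are hypothetical.

# Print the object names a1 .. aN, one per line.
object_names() {
    local n=$1
    local i=1
    while [ "$i" -le "$n" ]; do
        echo "a$i"
        i=$((i + 1))
    done
}

# Upload the same payload file under every generated name.
load_objects() {
    local pool=$1 n=$2 payload=$3 name
    object_names "$n" | while read -r name; do
        rados -p "$pool" put "$name" "$payload"
    done
}

# Ask the cluster to deep-scrub a single PG.
deep_scrub_pg() {
    ceph pg deep-scrub "$1"
}

# Example (needs a running cluster):
#   dd if=/dev/zero of=/tmp/payload bs=1K count=1
#   load_objects rbd 100 /tmp/payload
#   deep_scrub_pg 1.0
```

Nothing runs against the cluster until the commented example lines are executed by hand.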

Monitoring access to the PG directory

I used inotifywait to see which requests the objects in the PG receive during deep-scrub.

inotifywait -m 1.0_head

After setting debug_osd=20 on osd.0, look at the chunky-related log lines:

[root@lab8106 ceph]# cat ceph-osd.0.log |grep chunky:1|grep handle_replica_op

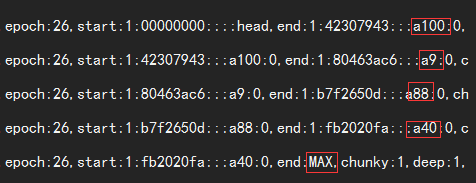

Here is an excerpt of the key part (see the figure):

25:1.0_head/ ACCESS a100__head_C29E0C42__1

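This looks very regular. Ceph has the concept of a chunk here: while scrubbing, Ceph locks the chunk being scrubbed, and this shows up in many places. That is also why slow requests appear. It is not necessarily that your disk is slow, but that the objects are locked and cannot be read.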

osd scrub chunk min

Description: The minimal number of object store chunks to scrub during single operation. Ceph blocks writes to single chunk during scrub.

The configuration documentation says writes are blocked and does not mention reads being blocked. So let's verify whether deep-scrub also causes slow requests on reads.

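Using the same 100-object environment, I changed the size of each object to 100 MB and set the chunk to 100 objects, so that all objects in this environment count as one big chunk. Then I read an object with rados to see what happens.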

osd_scrub_chunk_min = 100

Monitor with ceph -w:

2017-08-19 00:19:26.045032 mon.0 [INF] pgmap v377: 1 pgs: 1 active+clean+scrubbing+deep; 10000 MB data, 30103 MB used, 793 GB / 822 GB avail

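From the moment deep-scrub started I ran a get on object a40 (rados -p rbd get a40 a40), and it simply hung without returning. As long as the objects in a PG do not change, the order in which the PG is scrubbed stays the same, so I deliberately picked the object that is scrubbed last in this order for the get, and a slow request still appeared. This confirms the inference above: during a scrub, read requests for objects in the chunk being scrubbed are blocked as well. Now let's set the scrub chunk to 1 and see what happens.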
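To put a number on "hung without returning", a small timing wrapper is enough. This is only a sketch: `elapsed_seconds` is a hypothetical helper, and the output path `/tmp/a40` is an assumption. Ceph reports an operation as a slow request once it has been blocked for 30 s with the default `osd_op_complaint_time`, so a result of 30 or more here would match what `ceph -w` shows.

```shell
#!/usr/bin/env bash
# Sketch only: elapsed_seconds is a hypothetical helper.

# Run a command, discard its output, and print how many whole seconds it took.
elapsed_seconds() {
    local start end
    start=$(date +%s)
    "$@" >/dev/null 2>&1
    end=$(date +%s)
    echo $((end - start))
}

# Example (needs a running cluster, run while the PG is deep-scrubbing):
#   elapsed_seconds rados -p rbd get a40 /tmp/a40
```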

Change the configuration parameter to:

osd_scrub_chunk_min = 1

watch -n 1 'rados -p rbd get a9 a1'

Five such requests run at the same time, each getting a9 in a loop.

Then run deep-scrub. This time no slow request appeared.
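The five parallel read loops used in this test can be sketched as one function. `run_readers` is a hypothetical helper; unlike `watch -n 1` it does not pause one second between rounds, so it puts somewhat more pressure on the object.

```shell
#!/usr/bin/env bash
# Sketch only: run_readers is a hypothetical helper.

# Start $1 background loops; each runs the given command $2 times, then wait for all.
run_readers() {
    local readers=$1 rounds=$2
    shift 2
    local r=1
    while [ "$r" -le "$readers" ]; do
        (
            i=1
            while [ "$i" -le "$rounds" ]; do
                "$@" >/dev/null 2>&1 || echo "reader $r: round $i failed"
                i=$((i + 1))
            done
        ) &
        r=$((r + 1))
    done
    wait
}

# Example (needs a running cluster):
#   run_readers 5 60 rados -p rbd get a9 /tmp/a9
```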

### Another important parameter

osd scrub sleep

Description: Time to sleep before scrubbing next group of chunks. Increasing this value will slow down whole scrub operation while client operations will be less impacted.

So there is also the notion of a scrub group. Judging from the data, this group is 3, that is, 3 chunks.

osd_scrub_sleep = 5

Then run deep-scrub again and look at the log:

cat /var/log/ceph/ceph-osd.0.log |grep be_deep_scrub|awk '{print $1,$2,$28}'|less

You can see that one object is deep-scrubbed per second, and after 3 objects the scrub pauses for 5 s.
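Instead of reading the pattern off the log by eye, the bursts and pauses can be extracted with a short awk pass. This is a sketch under assumptions: `scrub_groups` is a hypothetical helper, it expects the three-column "date time object" output of the command above, and it ignores logs that cross midnight.

```shell
#!/usr/bin/env bash
# Sketch only: scrub_groups is a hypothetical helper.

# Read "date time object" lines; print the size of each burst and the pause after it.
# $1 = minimum gap in seconds that counts as a pause (default 2).
scrub_groups() {
    awk -v min_gap="${1:-2}" '
    {
        split($2, t, ":")                      # HH:MM:SS.ffffff
        now = t[1] * 3600 + t[2] * 60 + t[3]   # seconds since midnight
        if (NR > 1 && now - prev >= min_gap) {
            printf "%d objects, then %.0f s pause\n", count, now - prev
            count = 0
        }
        count++
        prev = now
    }
    END { if (count) printf "%d objects, end of log\n", count }'
}

# Example:
#   grep be_deep_scrub /var/log/ceph/ceph-osd.0.log | awk '{print $1,$2,$28}' | scrub_groups 2
```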

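Default scrub compared with the tuned settings

Let's compare the situation before and after the change by simulating a PG that holds 10000 small-file objects. The test files are all 1 KB; you can test with your own file model.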

In a massive-object scenario, around 10000 objects in a single PG is already quite a lot, so we simulate deep-scrub with 10000 objects.

cat /var/log/ceph/ceph-osd.0.log |grep be_deep_scrub|awk '{print $1,$2,$28}'|awk '{sub(/.*/,substr($2,1,8),$2); print $0}'|uniq|awk '{a[$1," ",$2]++}END{for (j in a) print j,a[j]|"sort -k 1"}'

The script above counts the number of objects scrubbed per second.

2017-08-19 01:23:33 184

You can see that about 300 objects are scanned per second, so one PG is finished in roughly 40 s. By default a chunk is 25 objects.
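The per-second count can also be done in a single awk pass, which is easier to read than the chained pipeline. This is a sketch, not the original script: `scrubs_per_second` is a hypothetical helper, it counts every `be_deep_scrub` log line (it skips the `uniq` step), and it assumes the date and time are the first two fields of each line, as in the OSD log used here.

```shell
#!/usr/bin/env bash
# Sketch only: scrubs_per_second is a hypothetical helper.

# Count be_deep_scrub log lines per second; prints "date HH:MM:SS count", sorted by time.
# Reads the files given as arguments, or stdin when there are none.
scrubs_per_second() {
    awk '/be_deep_scrub/ { n[$1 " " substr($2, 1, 8)]++ }
         END { for (k in n) print k, n[k] }' "$@" | sort
}

# Example:
#   scrubs_per_second /var/log/ceph/ceph-osd.0.log
```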

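An analogy: on a road 40 m long, a car moves forward at 1 m/s while people cross back and forth. With only one or two people crossing there may be no problem, but once 40 people are crossing within this stretch, you can imagine how high the chance of a collision is.

Or, if the same file is requested 40 times in a row, that corresponds to 40 people crossing the road at the same spot over and over. Isn't the chance of being hit very high then?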

Having read this far, you probably already know what to do:

osd_scrub_chunk_min = 1

Reducing the chunk size is like shortening the vehicle in the example above: the 25 m truck becomes a 1 m bicycle.
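For trying such values without editing ceph.conf, they can be injected into running OSDs. The sketch below only builds the argument string; `scrub_args` is a hypothetical helper, and setting `osd_scrub_chunk_max` to the same value as the minimum is my assumption (the listing above only shows `osd_scrub_chunk_min`). Whether a given release picks the values up without a restart is worth checking on a test cluster first.

```shell
#!/usr/bin/env bash
# Sketch only: scrub_args is a hypothetical helper.

# Build the injectargs string: $1 = chunk size in objects, $2 = sleep in seconds.
# Assumption: chunk_max is pinned to the same value as chunk_min.
scrub_args() {
    local chunk=$1 sleep_s=$2
    echo "--osd_scrub_chunk_min $chunk --osd_scrub_chunk_max $chunk --osd_scrub_sleep $sleep_s"
}

# Example (needs a running cluster; the change is lost when an OSD restarts):
#   ceph tell 'osd.*' injectargs "$(scrub_args 1 3)"
```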

[root@lab8106 ceph]# cat /var/log/ceph/ceph-osd.0.log |grep be_deep_scrub|awk '{print $1,$2,$28}'

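The lines above are an excerpt from the log. What do they mean? Three objects are scanned per second, then the scrub rests for 3 s before the next group. Hasn't that pushed the speed down very low? Also, in the scrub sleep test earlier one object was scrubbed per second, so why is it three per second here? It depends on the size of the objects being scrubbed: the larger the object, the longer its scrub takes. The objects in this test are 1 KB, which is very small, so three objects are scanned per second, and then the scrub waits for the configured sleep value before starting the next group.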

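In the environment above, the default settings scrub about 300 objects per second and move a locking window of 25 objects, inside which objects can be neither written nor read. After the change, 3 objects are scrubbed per second with a locking window of 1 object, so the number of objects locked per unit of time drops to a very low level. If you run a production cluster and still want scrub enabled, try lowering the chunk and raising the sleep.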
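The price of the smaller window is a much longer scrub, which a rough calculation makes concrete. This is a back-of-the-envelope sketch: `estimate_scrub_seconds` is a hypothetical helper, and it assumes each burst takes a fixed time followed by a fixed sleep, which real scrubs only approximate.

```shell
#!/usr/bin/env bash
# Sketch only: estimate_scrub_seconds is a hypothetical helper.

# Rough wall-clock estimate for deep-scrubbing one PG, in seconds.
#   $1 objects in the PG      $2 objects scrubbed per burst
#   $3 seconds per burst      $4 sleep seconds after each burst
estimate_scrub_seconds() {
    local objects=$1 per_burst=$2 burst_s=$3 sleep_s=$4
    local bursts=$(( (objects + per_burst - 1) / per_burst ))   # round up
    echo $(( bursts * (burst_s + sleep_s) ))
}

# Default-like settings: about 300 objects per second, no sleep.
#   estimate_scrub_seconds 10000 300 1 0
# Tuned settings: 3 objects per second, then 3 s of sleep.
#   estimate_scrub_seconds 10000 3 1 3
```

With the numbers from this article, the tuned settings give 13336 s, roughly 3.7 hours for a PG of 10000 small objects, against about half a minute with the defaults.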

The only cost is scan speed. If you want to scan faster, adjust the sleep parameter to control the speed; I won't go into that here.

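This article covers the impact of deep-scrub on a single PG. By default, scrub starts automatically once the maximum interval is reached. So my suggestion is not to rely on the built-in time control, but to analyze the scrub timestamps and object counts yourself, work out a plan, and then, for example, scan a fixed number of PGs every night. After a full round is finished, there is a self-defined no-scan period, which can be whatever you choose: one round every month or every two months both work. I will cover this in a separate article later.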
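As a starting point for such a nightly job, the selection step could look like the sketch below. It is not the method of the follow-up article: `oldest_pgs` is a hypothetical helper, and it assumes you already have a list of "pgid last-deep-scrub-stamp" lines. How to produce that list from `ceph pg dump` depends on the release and is not shown here.

```shell
#!/usr/bin/env bash
# Sketch only: oldest_pgs is a hypothetical helper.

# Read "pgid stamp" lines from stdin; print the $1 PG ids with the oldest stamps.
# Stamps must sort correctly as text, e.g. "2017-08-19 00:19:26.045032".
oldest_pgs() {
    sort -k2 | head -n "$1" | awk '{ print $1 }'
}

# Example (needs a running cluster; pg_stamps.txt is an assumed input file):
#   oldest_pgs 10 < pg_stamps.txt | while read -r pg; do
#       ceph pg deep-scrub "$pg"
#   done
```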

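Summary

About scrub you need to understand: when it happens, how much load it puts on your OSDs, how many objects it scans per second, and how to reduce the impact. These questions are where this article came from. Many problems can be solved through parameters; the key is to know what those parameters actually do.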

Change log

| Why | Who | When |
| --- | --- | --- |
| Created | 武汉-运维-磨渣 | 2017-08-19 |